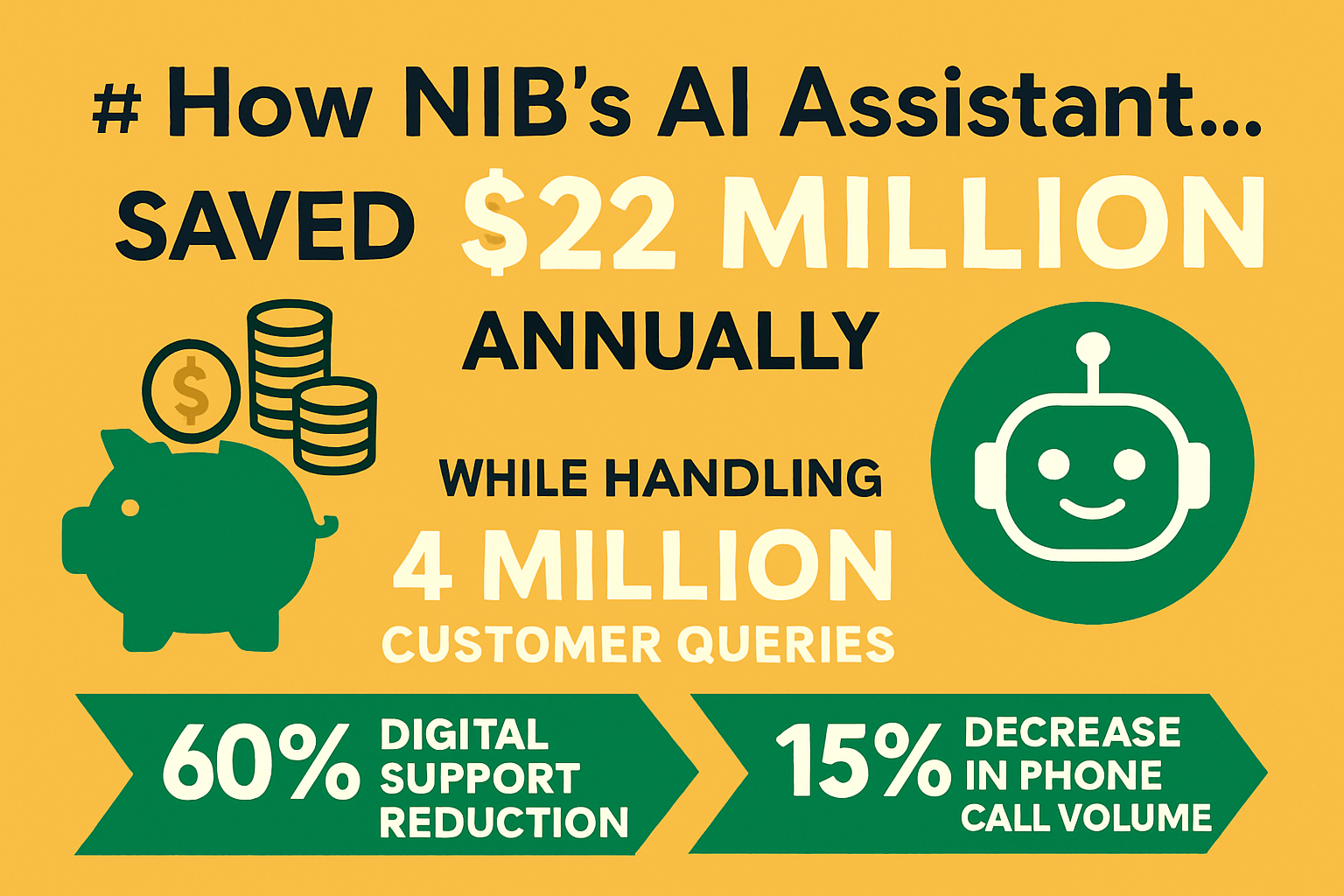

NIB deployed an AI-powered digital assistant named Nibby in 2021 that reduced human digital support requirements by 60% while handling over 4 million member queries. The result: $22 million in annual cost savings without degrading customer experience or requiring layoffs. Human agents didn’t disappear. They stopped answering routine questions about claim status and coverage details, focusing instead on complex health management tasks requiring empathy and judgment.

Phone call volume to customer service decreased 15% as customers chose to interact with Nibby for quick answers rather than waiting on hold for human agents. The AI didn’t just deflect calls to self-service. It resolved issues completely, providing answers and taking actions that previously required human intervention.

Here’s the counterintuitive part: most enterprise AI deployments fail to deliver meaningful ROI despite massive investments. NIB succeeded where most companies fail by starting with a specific high-volume use case (customer support inquiries), deploying proven technology (Amazon Bedrock and Anthropic’s Claude), and measuring results rigorously (cost savings, call reduction, query resolution). No revolutionary breakthroughs required. Just disciplined execution of AI deployment focused on clear business value.

The Customer Support Cost Structure

Health insurance customer support operates under brutal economics. Members contact insurers frequently about claims status, coverage questions, policy changes, and billing inquiries. Each interaction costs $5-15 when handled by human agents once you account for labor, training, infrastructure, and overhead. With millions of members generating tens of millions of annual inquiries, these costs accumulate into hundreds of millions annually.

Traditional cost reduction approaches involved offshoring to lower-wage regions, implementing rigid self-service portals that forced customers to navigate complex menus, or limiting support hours to reduce staffing needs. These approaches reduced costs but often degraded customer experience. The resulting dissatisfaction drove member churn, and replacing lost members required expensive acquisition spending.

The cost structure also included hidden inefficiencies. Human agents spent substantial time on after-call work: documenting interactions, updating systems, researching answers, and coordinating with other departments. A 10-minute customer conversation might require 5 additional minutes of administrative work, meaning agents spent 33% of their time on non-customer-facing activities that provided no direct value to members.

The staffing model also struggled with demand volatility. Customer inquiries spike during enrollment periods, after claims denials, and during health crises. Maintaining staff for peak demand meant excess capacity during normal periods. Understaffing for average demand created poor service during peaks. This capacity planning challenge meant companies either paid for idle staff or accepted periodic service failures.
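The capacity trade-off is easier to see with numbers. The sketch below is a rough illustration only: the monthly volumes and the per-agent capacity are hypothetical values chosen to show the shape of the problem, not NIB figures.

```python
import math

# Hypothetical monthly inquiry volumes with two seasonal spikes (not NIB data).
monthly_inquiries = [80_000, 82_000, 79_000, 85_000, 130_000, 150_000,
                     90_000, 84_000, 81_000, 83_000, 120_000, 86_000]
INQUIRIES_PER_AGENT_PER_MONTH = 1_000  # assumed capacity of one agent


def agents_needed(volume: int) -> int:
    """Agents required to absorb a given monthly volume."""
    return math.ceil(volume / INQUIRIES_PER_AGENT_PER_MONTH)


def idle_share(staffed_agents: int) -> float:
    """Fraction of paid annual capacity that goes unused."""
    monthly_capacity = staffed_agents * INQUIRIES_PER_AGENT_PER_MONTH
    capacity = monthly_capacity * len(monthly_inquiries)
    handled = sum(min(v, monthly_capacity) for v in monthly_inquiries)
    return 1 - handled / capacity


def unserved_share(staffed_agents: int) -> float:
    """Fraction of annual inquiries that exceed staffed capacity."""
    monthly_capacity = staffed_agents * INQUIRIES_PER_AGENT_PER_MONTH
    overflow = sum(max(0, v - monthly_capacity) for v in monthly_inquiries)
    return overflow / sum(monthly_inquiries)


peak_staff = agents_needed(max(monthly_inquiries))
avg_staff = agents_needed(sum(monthly_inquiries) // len(monthly_inquiries))

print(f"Staff for peak:    {peak_staff} agents, idle capacity {idle_share(peak_staff):.0%}")
print(f"Staff for average: {avg_staff} agents, unserved demand {unserved_share(avg_staff):.0%}")
```

With these made-up inputs, staffing for the peak leaves roughly a third of paid capacity idle, while staffing for the average leaves about a tenth of annual inquiries without an available agent.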

Training costs also accumulated substantially. Health insurance is complex, with policies varying by state, employer, and individual circumstances. New agents required months of training before handling inquiries competently. Agent turnover averaging 30-40% annually in call centers meant continuous training cycles consuming substantial resources.

NIB recognized that the customer support cost structure had fundamental economic problems that incremental improvements couldn’t solve. Only technology capable of handling high inquiry volumes at near-zero marginal cost could transform the economics. AI represented the first technology with potential to deliver this transformation if implemented effectively.

The 60% Digital Support Reduction

Reducing human digital support requirements by 60% is an extraordinary result compared to typical chatbot deployments that handle 20-30% of inquiries. Understanding how NIB achieved this level requires examining what makes its implementation different from failed attempts.

The 60% reduction came from Nibby handling both simple and moderately complex inquiries that traditional chatbots struggled with. Simple inquiries (“what’s my deductible?” or “where do I submit claims?”) are straightforward for any chatbot. Moderately complex inquiries requiring context and judgment (“my claim was denied, what can I do?” or “which plan covers this specific procedure?”) traditionally required human agents because rule-based chatbots couldn’t handle the nuance.

Nibby’s use of Claude 3.5 Sonnet provides natural language understanding capable of grasping customer intent even when expressed ambiguously or colloquially. A member asking “why didn’t you pay for my surgery?” might mean their claim was denied, processed partially, or not yet processed. The AI understands context from the conversation and customer history to interpret the actual question and provide relevant responses.

The system also accesses NIB’s customer databases in real-time, pulling individual policy details, claim histories, and account information to provide personalized responses rather than generic answers. When a member asks about coverage, Nibby responds based on their specific policy rather than general plan information that might not apply to them.

The triage capability particularly drives the 60% reduction. Nibby doesn’t just answer questions. It routes complex cases to appropriate human specialists rather than general support queues. A member with a claim appeal goes directly to the appeals team. Someone needing prior authorization connects to clinical reviewers. This intelligent routing reduces transfer time and ensures customers reach specialists who can actually resolve their issues.
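A minimal sketch of that routing idea follows. It assumes the language model has already classified the member's message into an intent label upstream. The intent labels, queue names, and fallback queue are hypothetical, since NIB's actual routing table is not public.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical intent labels and queue names, for illustration only.
SELF_SERVICE_INTENTS = {"deductible_lookup", "claim_status", "claim_submission_help"}
SPECIALIST_QUEUES = {
    "claim_appeal": "appeals_team",
    "prior_authorization": "clinical_reviewers",
}
FALLBACK_QUEUE = "general_support"


@dataclass(frozen=True)
class RoutingDecision:
    handled_by_assistant: bool
    queue: Optional[str]  # None when the assistant answers directly


def route(intent: str) -> RoutingDecision:
    """Map an already-classified intent to the assistant or a human queue."""
    if intent in SELF_SERVICE_INTENTS:
        return RoutingDecision(handled_by_assistant=True, queue=None)
    # Known specialist topics skip the general queue entirely.
    queue = SPECIALIST_QUEUES.get(intent, FALLBACK_QUEUE)
    return RoutingDecision(handled_by_assistant=False, queue=queue)


print(route("claim_appeal"))       # goes straight to the appeals team
print(route("deductible_lookup"))  # answered by the assistant
```

The point of the design is the lookup table: anything the assistant cannot resolve lands with a named specialist team where one exists, and only unrecognized intents fall back to the general queue.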

The 60% reduction also benefited from continuous learning. As Nibby interacts with members, NIB’s team reviews conversations where the AI struggled or where customers expressed dissatisfaction. They use these examples to improve responses, expand capabilities, and refine handling of edge cases. This continuous improvement means the 60% reduction represents current state, with potential for further improvement as the system matures.

The $22 Million Annual Savings

The $22 million annual cost savings represents the most concrete validation of Nibby’s business value. Understanding how NIB calculated this number and what it includes provides insight into AI deployment ROI assessment.

The savings primarily come from avoided human support costs. With Nibby handling 60% of digital support inquiries, NIB needs fewer agents to maintain service levels. At $50,000-70,000 per agent in fully loaded costs (salary, benefits, training, infrastructure), reducing headcount needs by hundreds of agents creates multi-million dollar savings.
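As a back-of-the-envelope check, the $22 million can be converted into agent equivalents. The sketch assumes, purely for illustration, that the entire saving is labor cost, which overstates the headcount effect because part of the saving comes from other sources such as reduced phone volume.

```python
ANNUAL_SAVINGS = 22_000_000            # from the article
LOADED_COST_RANGE = (50_000, 70_000)   # per-agent cost range from the article


def agent_equivalents(savings: float, cost_per_agent: float) -> float:
    """Number of fully loaded agent salaries that a saving corresponds to."""
    return savings / cost_per_agent


# The higher cost per agent gives the smaller headcount figure.
low = agent_equivalents(ANNUAL_SAVINGS, LOADED_COST_RANGE[1])
high = agent_equivalents(ANNUAL_SAVINGS, LOADED_COST_RANGE[0])

print(f"Equivalent to roughly {low:.0f} to {high:.0f} agents")
```

Under that simplifying assumption the saving corresponds to roughly 314 to 440 agents, which is consistent with the "hundreds of agents" framing.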

The calculation likely includes both actual headcount reduction and avoided hiring. NIB’s customer base grew during the period, which would traditionally require hiring additional support staff. Nibby enabled NIB to serve more members without proportional staffing increases, so part of the savings is growth absorbed without matching cost growth.

The 15% phone call reduction also contributes substantially to savings. Phone support costs more per interaction than digital channels due to longer handling times and higher per-minute costs. Some members who would otherwise have called now use Nibby instead, so each shifted contact replaces a costly phone interaction with a much cheaper digital one. Cutting phone volume by 15% without adding human digital support staff therefore reduces total support costs significantly.

The after-call work reduction of up to 20% improves agent productivity without reducing headcount. Agents spending less time on administrative tasks after conversations can handle more customer interactions per shift. This productivity improvement means the same number of agents can support larger member bases or handle more complex cases requiring extended time.
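The productivity effect can be worked through with the earlier example of a 10-minute conversation followed by 5 minutes of administrative work. Those two durations are illustrative figures, and the sketch assumes the full 20% reduction applies to every interaction.

```python
def non_customer_share(talk_min: float, after_call_min: float) -> float:
    """Share of total handling time spent on after-call work."""
    return after_call_min / (talk_min + after_call_min)


def capacity_gain(talk_min: float, after_call_min: float, acw_reduction: float) -> float:
    """Extra interactions per shift when after-call work shrinks by acw_reduction."""
    before = talk_min + after_call_min
    after = talk_min + after_call_min * (1 - acw_reduction)
    return before / after - 1


share = non_customer_share(10, 5)   # 5 / 15
gain = capacity_gain(10, 5, 0.20)   # 15 / 14 - 1

print(f"After-call share of time: {share:.0%}")
print(f"Capacity gain from 20% less after-call work: {gain:.1%}")
```

A 20% cut in after-call work trims each interaction from 15 to 14 minutes, so the same team can handle about 7% more interactions without any change in headcount.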

The savings calculation also likely includes efficiency improvements in claim processing and issue resolution. When Nibby handles routine inquiries quickly, members get their issues resolved faster, which reduces follow-up contacts. A member who gets an immediate answer about claim status doesn’t call back three days later to check on progress, eliminating redundant contacts.

The $22 million is a recurring annual saving, not a one-time gain. At that rate, cumulative savings would exceed $100 million within five years and keep growing over the system’s operational lifetime, dwarfing the initial development and deployment investment.

The 4 Million Query Resolution Scale

Handling over 4 million member queries since launch demonstrates Nibby’s scalability in ways traditional support models couldn’t match. This volume handled without proportional cost increases validates AI’s fundamental economic advantage for high-volume repetitive tasks.

Traditional support models have linear scaling economics: doubling query volume requires roughly doubling staff. AI support has dramatically different economics: once developed, handling 4 million queries costs only marginally more than handling 1 million because the incremental cost per interaction approaches zero.
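The difference between the two cost curves can be sketched in a few lines. The $10 per human-handled query is the midpoint of the $5-15 range quoted earlier. The AI fixed cost and marginal cost are hypothetical placeholders, since NIB's actual platform costs are not disclosed.

```python
def human_cost(queries: int, cost_per_query: float = 10.0) -> float:
    """Linear model: every query consumes agent time."""
    return queries * cost_per_query


def ai_cost(queries: int, fixed_cost: float = 2_000_000.0,
            marginal_cost: float = 0.05) -> float:
    """Fixed build-and-run cost plus a small per-query inference cost (assumed values)."""
    return fixed_cost + queries * marginal_cost


for volume in (1_000_000, 4_000_000):
    print(f"{volume:>9,} queries: human ${human_cost(volume):>12,.0f}   "
          f"AI ${ai_cost(volume):>12,.0f}")

human_ratio = human_cost(4_000_000) / human_cost(1_000_000)
ai_ratio = ai_cost(4_000_000) / ai_cost(1_000_000)
print(f"Cost multiple for 4x volume: human {human_ratio:.2f}x, AI {ai_ratio:.2f}x")
```

Under these assumptions, quadrupling volume quadruples the human cost but raises the AI cost by less than 10%, because almost all of the AI spend is fixed.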

The 4 million queries also provided a large body of real conversations for improving Nibby’s effectiveness. Reviewed conversations show NIB’s team which questions are common, which responses work, and which situations require human escalation. This accumulated learning means Nibby’s 4 millionth conversation benefits from lessons learned in the previous 3,999,999 interactions.

The query volume also suggests that members accept, and often prefer, AI support when it is implemented well. Four million queries represent millions of occasions on which a member chose to interact with Nibby rather than seek a human agent. This adoption indicates that AI support doesn’t just reduce costs. It provides experiences that members find satisfactory or even preferable to traditional support channels.

The scale also demonstrates operational reliability. Handling millions of queries requires system stability and performance that experimental AI deployments often lack. Nibby’s ability to operate consistently at this scale without major failures or service disruptions shows enterprise-grade implementation rather than prototype-level technology.

The Technology Stack Decisions

NIB’s choice to use Amazon Bedrock and Anthropic’s Claude 3.5 Sonnet rather than building proprietary AI or using simpler chatbot technology reflects sophisticated thinking about build-versus-buy decisions for enterprise AI.

Amazon Bedrock provides managed infrastructure for running large language models without requiring NIB to become AI infrastructure experts. Bedrock handles model hosting, scaling, security, and updates, allowing NIB to focus on application development rather than infrastructure management. This managed approach reduces deployment time and operational complexity compared to self-hosting models.

Claude 3.5 Sonnet represents one of the most capable language models available, providing natural conversation abilities and reasoning that earlier chatbot technologies couldn’t match. The model’s ability to understand context, handle ambiguity, and maintain coherent multi-turn conversations enables the 60% automation rate that simpler rule-based systems couldn’t achieve.

The decision to use commercial AI models rather than training proprietary models from scratch reflects practical assessment of NIB’s core competencies. NIB excels at health insurance operations, not at fundamental AI research. Using commercially available state-of-the-art models enables accessing cutting-edge capabilities without requiring world-class AI research teams.

The technology choices also provide upgrade paths as AI capabilities improve. When Anthropic releases better models, NIB can potentially upgrade Nibby’s underlying AI without rebuilding the entire application. This future-proofing ensures continued improvement rather than building on technology that becomes obsolete.
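One way to keep that upgrade path open is to treat the model identifier as configuration rather than hard-coding it. The sketch below builds a request in the shape used by the Amazon Bedrock Converse API without actually sending it. The `AssistantConfig` class, the prompt text, and the second model identifier are hypothetical, and nothing here describes how NIB actually structures Nibby.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssistantConfig:
    model_id: str             # the only field that changes on a model upgrade
    max_tokens: int = 512
    temperature: float = 0.2


def build_converse_request(config: AssistantConfig, system_prompt: str,
                           user_message: str) -> dict:
    """Assemble keyword arguments in the Bedrock Converse request shape."""
    return {
        "modelId": config.model_id,
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": user_message}]}],
        "inferenceConfig": {
            "maxTokens": config.max_tokens,
            "temperature": config.temperature,
        },
    }


# Example identifier for Claude 3.5 Sonnet on Bedrock; check the current model catalog.
current = AssistantConfig(model_id="anthropic.claude-3-5-sonnet-20240620-v1:0")
# Placeholder for a future model; not a real identifier.
upgraded = AssistantConfig(model_id="example.future-model-v1")

prompt = "You are a health insurance support assistant."  # hypothetical prompt
question = "Why didn't you pay for my surgery?"

request_now = build_converse_request(current, prompt, question)
request_later = build_converse_request(upgraded, prompt, question)
```

With boto3 installed and AWS credentials configured, such a dictionary could be passed as `boto3.client("bedrock-runtime").converse(**request_now)`. Because the application code only ever sees the config object, moving to a newer model becomes a configuration change followed by regression testing rather than a rebuild.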

The Human Agent Reallocation

The 60% reduction in human digital support requirements didn’t translate to 60% workforce reduction. NIB reallocated agents to higher-value activities requiring human expertise rather than just eliminating positions. This approach addresses both economic optimization and employee concerns about AI displacement.

Agents previously handling routine inquiries moved to specialized roles requiring empathy, judgment, and complex problem-solving. Health management support for members with chronic conditions benefits from human agents who can build relationships and provide emotional support alongside technical assistance. Complex claim appeals require understanding nuanced circumstances and advocating for members in ways AI cannot.

The reallocation also improved job satisfaction for support staff. Agents prefer engaging in meaningful conversations helping members navigate difficult health situations over repetitively answering “what’s my deductible?” for the hundredth time. The shift to higher-value work made support roles more fulfilling and reduced turnover, creating additional cost savings through improved retention.

The transition also involved retraining agents for new responsibilities. Staff previously handling general inquiries received training in specialized areas like case management, appeals support, or clinical coordination. This investment in workforce development created career advancement opportunities rather than just eliminating positions, building loyalty and institutional knowledge.

The Customer Experience Transformation

The AI implementation improved rather than degraded customer experience despite reducing human interaction. This outcome contradicts common assumptions that automation necessarily worsens service quality.

Members benefit from immediate responses rather than waiting for available agents. Nibby answers inquiries 24/7 without hold times or business hour restrictions. A member wondering about coverage at 11pm receives instant answers rather than waiting until morning to call support. This immediacy creates superior experiences for time-sensitive questions.

The consistency also improves experiences. Human agents vary in knowledge, communication skills, and helpfulness. Some provide excellent service while others struggle. Nibby provides consistently accurate responses based on NIB’s policies and each member’s specific coverage. This consistency eliminates the lottery of whether you reach a helpful agent or struggle with someone less knowledgeable.

The self-service empowerment also appeals to members who prefer solving problems independently rather than explaining situations to strangers. Many people find calling customer support frustrating and prefer digital self-service when it actually works. Nibby provides effective self-service that traditional web portals couldn’t deliver because it understands natural language rather than requiring navigation through rigid menu structures.

The experience improvement shows up in the 15% phone call reduction. Members choosing digital channels over phone calls signal satisfaction with AI support quality. If Nibby provided poor experiences, members would avoid it and call instead. The call volume decrease validates that members find Nibby helpful enough to prefer it over speaking with humans.

The Scalability Without Proportional Costs

NIB’s ability to grow their customer base without corresponding staffing increases demonstrates AI’s transformative economic impact on service business models. This scalability represents the fundamental value proposition that makes AI strategically important beyond just cost reduction.

Traditional service businesses face linear scaling constraints: serving twice as many customers requires roughly twice as many employees. This creates economic challenges for growth because maintaining profit margins while scaling requires either raising prices or accepting lower quality. Most mature service businesses eventually hit a ceiling because these scaling economics prevent profitable expansion.

AI breaks this constraint by handling marginal volume at near-zero incremental cost. Once Nibby was deployed, serving NIB’s 500,000th member cost essentially the same as serving their 100,000th member. This dramatically different scaling dynamic enables profitable growth that wouldn’t be economically viable with traditional labor-intensive support models.

The scalability also provides strategic flexibility. NIB can pursue growth opportunities, enter new markets, or offer additional services without the constraint of proportionally scaling support infrastructure. This flexibility enables strategies that fixed-cost structures would make impossible.

The scalability advantage also compounds over time. As NIB grows, the per-member support cost continues declining because infrastructure costs get amortized across more members while marginal support costs remain minimal. This creates improving unit economics that traditional models cannot achieve.

The Australian AI Adoption Context

NIB’s success stands in stark contrast to broader Australian business AI adoption where most companies report AI not meeting expectations. This divergence reveals important lessons about what distinguishes successful AI deployment from failures.

Most Australian businesses approached AI with vague goals like “improve efficiency” or “enhance customer experience” without defining specific measurable outcomes or focusing on particular use cases. NIB focused explicitly on customer support automation with clear metrics: reduce support costs, decrease call volume, and handle specific query volumes. This focus enabled measuring success and iterating based on concrete results rather than ambiguous qualitative assessments.

The technology choices also differ. Many companies deployed simple chatbots using basic pattern matching that frustrated customers with irrelevant responses. NIB invested in sophisticated language models capable of actually understanding customer inquiries and providing helpful responses. The technology quality difference directly affected outcome quality.

The expectations management also matters. Companies expecting AI to revolutionize entire businesses face disappointment when implementations deliver incremental improvements. NIB expected AI to transform customer support specifically, a realistic goal that the technology could actually achieve. This appropriate scoping prevented the disappointment that comes from unrealistic expectations.

The Continuous Improvement Approach

NIB continues exploring new areas for AI integration beyond customer support, leveraging Nibby’s success to drive further innovation. This continuous improvement approach treats AI as ongoing capability development rather than one-time project completion.

The success with customer support automation gives the organization confidence in AI deployment, which enables expansion into new areas. Teams can point to Nibby’s concrete results when proposing AI investments in other functions, overcoming the skepticism that often blocks first adoption attempts.

The accumulated expertise also accelerates future AI projects. NIB’s teams learned how to deploy AI effectively, integrate with existing systems, measure results accurately, and manage change. This institutional knowledge transfers to new projects, reducing implementation time and risk compared to their first AI deployment.

The continuous improvement also includes enhancing Nibby itself. As AI technology advances, NIB can upgrade underlying models, add new capabilities, and improve integration with operational systems. This ongoing enhancement ensures Nibby remains state-of-the-art rather than becoming obsolete technology that needs complete replacement.

The Industry Model Implications

NIB’s success provides a replicable model for insurers and service businesses seeking customer support automation. The key lessons transfer across industries facing similar high-volume inquiry challenges.

The focused use case approach works across industries. Start with specific high-volume repetitive interactions that AI can handle well rather than attempting to automate everything simultaneously. Prove value in one area before expanding scope.

The technology choice lessons also transfer. Invest in sophisticated AI capabilities rather than deploying basic chatbots that frustrate customers. The incremental technology cost gets quickly recovered through better automation rates and customer satisfaction.

The human reallocation strategy addresses workforce concerns. Position AI as enabling staff to focus on higher-value work rather than framing it as pure headcount reduction. This messaging reduces resistance and maintains institutional knowledge.

The measurement discipline ensures accountability. Define clear metrics for success before deployment and track them rigorously. This enables course correction when results don’t meet expectations and validates investments when they succeed.

Your Strategic Response Path

For organizations considering customer support AI, NIB’s approach provides a proven playbook rather than requiring experimental development.

Start by quantifying current customer support costs broken down by inquiry types, channels, and complexity. This baseline identifies high-volume repetitive inquiries most suitable for AI automation and establishes metrics for measuring success.
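A baseline of this kind can start as a simple aggregation over a contact log. The records, field names, and per-contact costs below are hypothetical. A real analysis would pull them from the contact center and finance systems.

```python
from collections import defaultdict

# Hypothetical contact log; each record is one handled inquiry.
inquiries = [
    {"type": "claim_status", "channel": "phone", "cost": 12.0},
    {"type": "claim_status", "channel": "chat", "cost": 6.0},
    {"type": "claim_status", "channel": "chat", "cost": 6.0},
    {"type": "coverage_question", "channel": "chat", "cost": 7.0},
    {"type": "coverage_question", "channel": "phone", "cost": 13.0},
    {"type": "claim_appeal", "channel": "phone", "cost": 15.0},
]


def cost_by_type(records):
    """Total volume and cost per inquiry type, most expensive type first."""
    totals = defaultdict(lambda: {"count": 0, "cost": 0.0})
    for record in records:
        entry = totals[record["type"]]
        entry["count"] += 1
        entry["cost"] += record["cost"]
    return sorted(totals.items(), key=lambda item: item[1]["cost"], reverse=True)


ranked = cost_by_type(inquiries)
for inquiry_type, stats in ranked:
    print(f"{inquiry_type:<18} count={stats['count']}  total=${stats['cost']:.2f}")
```

Inquiry types that rank high on both volume and total cost, and that are routine in nature, are the natural first candidates for automation.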

Invest in sophisticated AI technology rather than basic chatbots. The incremental cost difference between basic and advanced AI is minimal compared to the massive performance difference. Claude-level language models cost more than simple pattern matching but deliver dramatically better results.

Plan for human workforce transition rather than just reduction. Identify higher-value activities where reallocated staff can provide greater impact. This protects institutional knowledge while improving job satisfaction.

Measure results rigorously including cost savings, automation rates, customer satisfaction, and operational metrics. Concrete measurements validate investments and guide continuous improvement.
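Two of these metrics can be computed directly from conversation outcomes. The sketch below uses a hypothetical `Conversation` record and made-up sample data. The definitions (automation rate as the share of conversations resolved without human handoff, satisfaction as a 1-5 survey score) are assumptions, not NIB's published methodology.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Conversation:
    resolved_by_assistant: bool
    satisfaction: Optional[int]  # 1-5 survey score, None if the member skipped it


def automation_rate(conversations: List[Conversation]) -> float:
    """Share of conversations resolved without a human handoff."""
    if not conversations:
        return 0.0
    resolved = sum(c.resolved_by_assistant for c in conversations)
    return resolved / len(conversations)


def average_satisfaction(conversations: List[Conversation]) -> Optional[float]:
    """Mean survey score over conversations that have one."""
    scores = [c.satisfaction for c in conversations if c.satisfaction is not None]
    return sum(scores) / len(scores) if scores else None


# Made-up sample data for illustration.
sample = [
    Conversation(True, 5),
    Conversation(True, 4),
    Conversation(False, 3),
    Conversation(True, None),
    Conversation(False, 2),
]

print(f"Automation rate: {automation_rate(sample):.0%}")
print(f"Average satisfaction: {average_satisfaction(sample)}")
```

Tracking the two together matters: an automation rate that rises while satisfaction falls signals deflection rather than resolution.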

The Future of Service Operations

Customer service is transitioning from labor-intensive operations to AI-augmented models where humans focus on complex high-value interactions while AI handles routine inquiries. The companies that successfully make this transition build sustainable cost advantages and scaling capabilities that labor-dependent competitors cannot match.

NIB proved that $22 million annual savings and 60% automation rates are achievable realities rather than just theoretical possibilities. The question isn’t whether customer support AI works. It’s whether your organization will deploy it while it still provides competitive advantages or wait until competitive pressure forces reactive adoption.

Handling 4 million queries without proportional cost increases isn’t revolutionary technology. It’s disciplined deployment of proven AI focused on clear business value rather than pursuing impressive but impractical capabilities.